Linux basics session 4

PATH types:- absolute paths - start with '/' (pronounced "root") and describe complete filesystem path for a file (directory), e.g. /usr/share

- relative paths - does not start with '/' they represent path relative to the current working directory (that's usually visible in the prompt in blue color or run

pwdto see it)

to avoid confusion between system wide commands and relative paths, it's a good habit to start relative path by './' which means current directory especially when we want to use TAB to see current directory suggestions

to express relative navigation to a directory one level up '../' is used'../../' is a relative path two directories up from my current working directory

special case is relative path starting with '~', which is an alias for you homedir, so path is relative to your home, to '/home/vasek' in my caseDon't forget that TAB key is always your friend

Make a copy of words file, open editor on a line

cd - navigate to your home dir

cp <source file path> <destination file path> - if destination is a directory, source filename is preserved (

cp /usr/share/dict/words ~/work - this will create a new file called "words" complete path to the new file is /home/vasek/work/words

cd ./work``grep -n "laboratory" ./words - search for "laboratory" pattern in a file and display a line number

nano +59725 ./words - opens editor on a specific line

Pipes

Piping each command in terminal has STDIN (standard input) and STDOUT (standard output)output of one command can be "piped" into input of another command, piped commands are separated by '|'

processing STDIN is at command's discretion, usually we can either use STDIN from previous command or provide

Displaying partial text file contentcat <file> - print whole file content - for long files this is not practicalhead -20 <file> - print first 20 linestail -20 <file> - print last 20 lines of a filehead -100 <file> | tail -20 - display lines 80-100 of a file (note tail receives data from STDIN and does not have

grep <pattern> <file> -B 10 -A 5 - grep options "B" (before) and "A" (after) are used to display surrounding lines of every matched line

shuf <file> - displays lines of a file in a random ordershuf <file> | head -5 displays random 5 lines from a file

STDOUT redirection

Standard output of any command (what we see in console) can be redirected to a file by adding '>

shuf ./words | head -5 > ./words01 - creates a new file 'words01' containing 5 random lines from 'words' file

Everytime '>' redirection is used, whole file content is replaced with complete output of the command.Sometimes we want to keep old data and append new lines to the file, then we use '>>

shuf ./words | head -5 >> ./words01 - append 5 random lines with each run (file 'words01' is created if does not exist)This is helpful when we want to track information in time (so called log files) - we usually put a time stamp at the beginning of each output line

Compiling and cutting .csv files

Create 3 files with random samples from the words file

shuf words | head -5 > mywords01``shuf words | head -5 > mywords02``shuf words | head -5 > mywords03Merge files into one where each line is created by comma separated values from respective lines of other filespaste -d ',' mywords01 mywords02 mywords03 > data.csv - command paste does exactly what we need, but by default uses TAB for separating values, so we ask to use ',' (comma) with -d parameterpaste -d ',' mywords* > data.csv - this is equivalent to the above, we use here '*' (star, asterisk, wild-card) that BASH interpreter will expand to the list of possible filenames (like if you press TAB key when entering the command)

To display only certain fields from a file if we know the separator (delimiter)

cut -d ',' -f 2 data.csv - print second field on each linecut -d ',' -f 1,3 data.csv - print first and third field on each linecut -d ',' -f 1-3 data.csv - print a range of fields, in this example first three fieldsHINT: if you use '-c N-M' instead of -f and omit '-d' input is cut on individual characterscut -c 5-10 words - display characters from 5th to 10th

Useful text processing commands for pipes

sort - use in command pipeline to sort inputuniq - use in command pipeline to remove consequential duplicitieswc -l - use at the end of a command pipeline to display number of lines

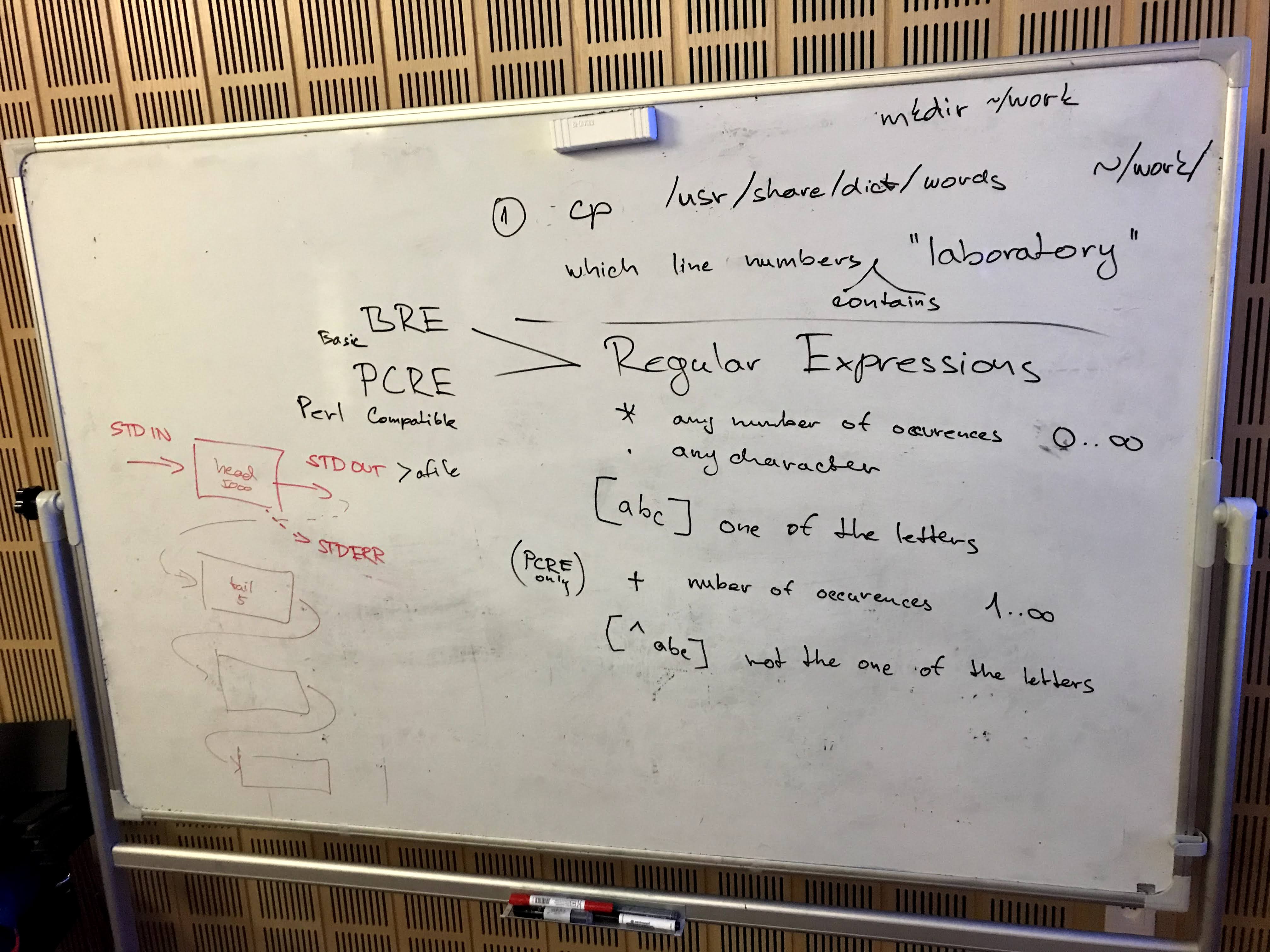

Regular Expressions (regexp, RE)

RegExp is a way to formulate string patterns with great flexibility, it is not only in grep command, RegExp knowledge is transferable to all programming and scripting languages.RegExp have several definitions - in grep by default is BRE (Basic), wide standard is using PCRE (Perl Compatible) - to use PCRE with grep use grep -P (not by default due to higher CPU demand - not recognizable on our scale, use by default)'.' is any character[abc] set of possible letters[^abc] set of excluded letters(abc|def) set of possible strings - we call these groups'?' optional presence of previous character'*' optional and multiple presence of previous character'+' at least one occurrence of the previous character (this needs PCRE)

Regular Expressions Cheat Sheet: regexp_cheat_sheet.pdf - by Dave Child from https://cheatography.com

Self-study

- save lines 2000-5000 into a file called "input_data"

- from "input_data" file list all lines not ending with "s" (bonus: only not ending with "'s" - apostrophe and "s")

- from "input_data" file extract third and fifth letter (extra: use 2. instead of "input_data")

- same as 3. and count unique combinations

- can you process all above in a single command?

- can you save your command into a file called "script.sh"? (hint: echo command will come handy)